What I learnt this month: Jan 2025

Rust

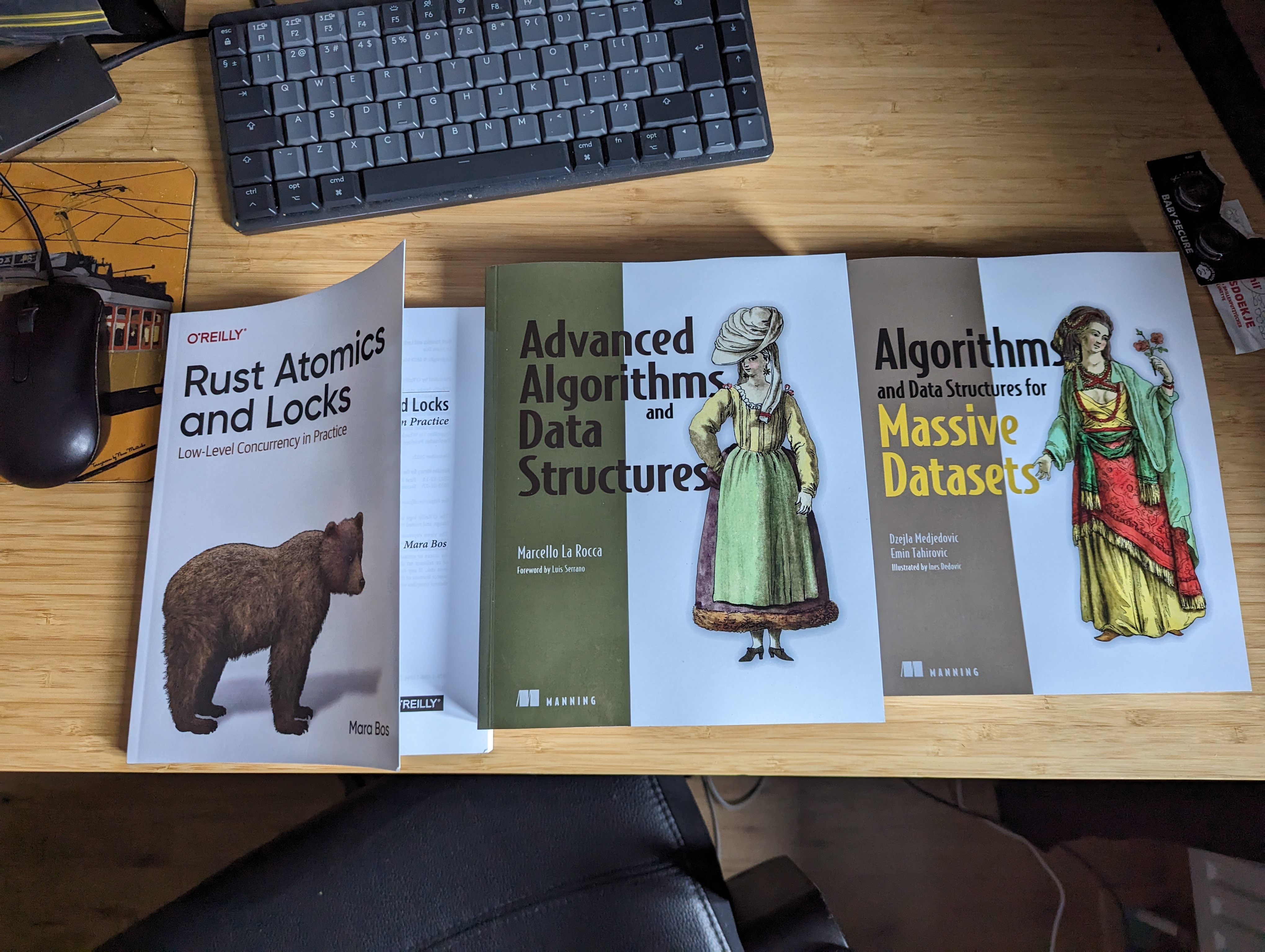

Started learning Rust. I have written code for experiments in Zig and C, but Zig is not a get paid to write code Job friendly language yet (unless you are cracked enough to work on the Bun team with Jared) and I do not want to write or deal with C and Makefiles for a living. I started learning Rust, but instead of learning it from scratch, I started reading the Rust Atomics and Locks book. It’s great. You should too!

Before reading the book, I used the Three Easy Pieces book to refresh my concurrency primitives knowledge, which turned out to be a good decision as I could compare and contrast the C and Rust implementation. The type system of Rust really helps (for example, when locking a Mutex to access a shared data structure. the return value of the Mutex’s .lock() method returns a reference to the data structure that could be manipulated and the reference dropped as soon we are done, unlocking the mutex). In contrast to C where the locking and manipulation has to be done by the user themselves.

By itself this is not a game changing difference, but small guarantees like these add up and I can see the value of such good API design and type system as we deal with large codebases with perhaps 10s of thousands of tests and millions of lines of code where all these potential points could result in a bug.

Large Dataset Algorithms

We all know about Trees, Hash Tables, Graphs, Lists etc. All these data structures work well enough when the data fits in a system’s memory. But what if the datasets are so big that they no longer do, such as counting the number of times an image on a e-commerce website was viewed ? The volume of the data makes even O(n) scaling algorithms slow. Are there algorithms or data structures that can give us an “approximate” estimate that are accurate enough, but in practice are faster by an order of magnitude or more ?

I had access to O’Reilly’s online platform for books (thanks to my employer) where I chanced upon the book Algorithms and Data Structures for Massive Datasets. I remember reading about the term HyperLogLog a long time ago, an algorithm to approximately count the number of distinct elements in a dataset used by systems like ElasticSearch. The book has a lot of interesting content on the design, implementation and most importantly the trade-offs of such data structures and algorithms. The topics cover Hashing, Sketch Data Structres, streaming data systems and External memory algorithms.

I have so far read through the first 4 chapters and learnt a ton about hashing, notably Bloom Filters, their usage with Sorted String Tables in many Key Value Databases ( also their limitations such as the inability to resize without storing the original keys). In contrast, Quotient Filters hash keys into a n-bit value and split them into a quotient and a remainder part (kinda like a set-associative cache or paging scheme in RAM) of q and r bits. They keys with the same quotient but varying r are sorted and stored as a consecutive run in the table, which makes it possible and easier to resize the filter later. Resizing can be done as trivially as stealing the most significant bit from the remainder and appending it to the quotient to expand the size of the filter !

I didn’t stop there, some digging into the rabbithole of hashing schemes, brought me to the Cuckoo Hashing scheme where the hash table is split into n smaller tables and each each table can be looked-up in parallel with a more complicated insert scheme. The table has interesting properties that it can support high load factors while still maintaining very fast lookup times ! Boy was I surprised by what came next as I searched further on Cuckoo Hashing, a KV store implemented on an FPGA.

An FPGA has SRAM elements spread across the chip, thus making the parallel table lookups extremely suitable to the architecture of the FPGA. The author is an absolute madlad to have implemented a networked hashtable that can do lookups in the order of 2-3 cycles ! And he didn’t just stop there, he wrote a Hardware Description Language from scratch based on Haskell, to implement, verify and synthesis his designs. If there ever were a top 10 list of cracked developer ideas, this for sure would have a place on it.

There are other hashing schemes like Robinhood hashing, that similar to the logic of an LRU cache, evicts items with the least/most displacement to another slot when the key to be inserted is hashed to a slot that is already occupied. I haven’t dug too deep into this, but there are some interesting repos with write ups on the performance of such hashmaps against other designs such as the STL HashMap from C++ or Google’s Abseil.

What next ?

The Rust Atomics and Locks book had been a great start so far. I also got lucky with the timing as just last month I decided to read through and re-implement the Mutex implementation from nsync to better understand how Mutexes are implemented from the ground up (from scratch seems to be too cliched right now, so I am restoring to a less cringy alternative)

The nsync library got highlighted by Justine Tunney’s the fastest mutexes post where she benchmarked various mutex implementations for performance and got so impressed by nsync that she decided to use it as a part of Cosmopolitan LibC.

nsync mutexes are not just mutexes but also can be used as an Reader-Writer lock, where multiple readers can lock the same mutex concurrently, or just one writer can hold the lock at any point in time.

The implementation is also very clever, to avoid starvation of writers or readers when the lock comes under heavy contention. Even though the mutex specific code is around a few hundred lines of code, the logic is very dense and with no accompanying design documentation, it will be an interesting study on the design and implementation of a high performance mutex. Stay tuned for the next month!